I was asked the other day what the connection was between High Performance Computing (HPC) and Clouds, so I thought I would try to post an answer here. Let's first talk a little bit about HPC.

High Performance Computing is about squeezing every last FLOPS and IOPS out of the largest machine you can get your hands on, in order to run your code as fast as possible. It is about batch processing on as many cores as you can get, so you can solve the largest problem you are facing. For a while, supercomputers were large shared-memory machines, but in the late nineties distributed-memory systems appeared. They were cheaper, and you could assemble lots of nodes to get hundreds of CPUs. Today the Top500 supercomputers are ranked every six months, and this ranking is the stage for a great technological battle between countries, vendors, research labs and programmers. In the latest ranking, Sequoia, the supercomputer at Lawrence Livermore National Laboratory, topped the list at 16.32 PetaFlop/s with 1,572,864 cores. Weather modeling, atomic weapons simulation, molecular dynamics, genomics and high energy physics are among the fields that benefit from HPC.

There is a big difference, however, within HPC itself. It is the difference between applications that rely heavily on inter-process communication and need a low-latency network for message passing, and applications where each process runs an independent task, the so-called embarrassingly parallel applications (MapReduce, for example, is one way to express an embarrassingly parallel problem). High Throughput Computing (HTC) describes the type of application that needs access to a large number of cores over a specific amount of time. Protein folding, popularized by the Folding@home project running on PS3s as well as desktops, is a good example. Financial simulations such as stock price forecasting and portfolio analysis also tend to fall into that category due to their statistical nature, as does graphics rendering for animated movies. HTC cares less about performance (as measured by FLOPS) and more about productivity (as in processing lots of jobs).
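
To make "embarrassingly parallel" concrete, here is a minimal sketch in Python of a map step followed by a reduce step. It is only an illustration: the Monte Carlo estimate of pi, the function names `count_hits` and `estimate_pi`, and the `map_fn` parameter are all made up for this example and do not come from any particular framework. The point is that each task needs nothing from the others, so the map step can run anywhere.

```python
import random
from functools import reduce


def count_hits(task):
    """Map step: one independent task.

    Takes (seed, samples) and returns how many random points
    fall inside the unit quarter circle.
    """
    seed, samples = task
    rng = random.Random(seed)  # private generator: no state shared between tasks
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(n_tasks, samples_per_task, map_fn=map):
    """Run n_tasks independent tasks, then reduce their partial counts.

    map_fn is hypothetical plumbing for this sketch: anything with the
    same calling convention as the built-in map() can be swapped in.
    """
    tasks = [(seed, samples_per_task) for seed in range(n_tasks)]
    partial_counts = map_fn(count_hits, tasks)                   # map: no communication
    total_hits = reduce(lambda a, b: a + b, partial_counts, 0)   # reduce: one final sum
    return 4.0 * total_hits / (n_tasks * samples_per_task)


if __name__ == "__main__":
    print(estimate_pi(8, 100_000))
```

By default this runs serially with the built-in `map`. Passing the `map` method of a `concurrent.futures.ProcessPoolExecutor` as `map_fn` should spread the same tasks over local cores without touching `count_hits`, which is exactly why this class of problem does not care much about network latency.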

The HPC quest for performance seems totally at odds with the IaaS layer of clouds, at least when one thinks of a true HPC workload that consumes every flop. Virtualization, the key enabler of IaaS, introduces overhead, both in CPU cycles and in network latency, and has thus been deemed "evil" for true HPC. Even with directed I/O, device passthrough, VM pinning and other tuning options that reduce the overhead of virtualization, you might think that this would be it: no connection between HPC and Clouds. However, according to a recent academic study of hypervisor performance from a group at Indiana University, this may not be entirely true, and it would also be forgetting the users and their specific workloads.

In November 2010 a new player arrived in the Top500: Amazon EC2. Amazon submitted a benchmark result which placed an EC2 cluster 233rd on the Top500 list. By June 2011, this cluster was down to rank 451. Yet it proved a point: a Cloud-based cluster could do High Performance Computing, racking up 82.5 TFlops peak using VM instances and a 10 GigE network. In November 2011, Amazon followed with a new EC2 cluster ranked 42nd, with 17,023 cores and 354 TFlops peak. This cluster is made of "Cluster Compute Eight Extra Large" instances with 16 cores, 60 GB of RAM and a 10 GigE interconnect, and is now ranked 72nd. For $1000 per hour, users can get an on-demand, personal HPC cluster that itself ranks in the Top500.

CycleComputing, a leading HTC software company, also demonstrated last April the ability to provision a 50,000-core cluster across all availability zones of AWS EC2. In such a setup, the user is operating in an HTC mode with little or no need for low-latency networks and inter-process communication. Cloud resources seem to be able to fulfill both the traditional HPC need and the HTC need.

The high-end HPC users are few; they are the ones fighting for every bit of performance. But you also have the more "mundane" HPC user: the one who has a code that runs in parallel but only needs a couple hundred cores, and who can tolerate a ~10% performance hit, especially if that means he can run on hundreds of different machines across the world and thus reach a new scale in the number of tasks he can tackle. This normal HPC user tends to have an application that is embarrassingly parallel, expressed in a master-worker paradigm where all the workers are independent. The workload may not be extremely optimized, it may wait for I/O quite a bit, and it may be written in a scripting language. It is not a traditional HPC workload, but it needs an HPC resource. This user wants on-demand, elastic HPC if his application and the pricing permit, and he needs to run his own operating system, not the one imposed by the supercomputer operator. HPC as a Service, if you wish. AWS has recognized this need and offered a service. The ease of use balances out the performance hit. For those users, the Cloud can help a lot.
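
For readers who have not met the master-worker paradigm, here is a rough sketch of it in Python. Everything in it is an assumption for illustration: the names `worker` and `run_master` are hypothetical, and local threads stand in for what would be separate VMs in a real cloud deployment. It has no error handling and is not how any particular scheduler implements it.

```python
import queue
import threading


def worker(tasks, results, work_fn):
    """Pull tasks from the shared queue until the master sends a None sentinel."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        task_id, payload = item
        # Workers never talk to each other, only to the two queues.
        results.put((task_id, work_fn(payload)))


def run_master(payloads, work_fn, n_workers=4):
    """Hand out independent tasks to n_workers and collect results in input order."""
    tasks, results = queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(tasks, results, work_fn))
        for _ in range(n_workers)
    ]
    for t in threads:
        t.start()
    for task_id, payload in enumerate(payloads):
        tasks.put((task_id, payload))
    for _ in threads:
        tasks.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    # All workers have finished, so the results queue is complete.
    collected = {}
    while not results.empty():
        task_id, value = results.get()
        collected[task_id] = value
    return [collected[i] for i in range(len(collected))]


if __name__ == "__main__":
    print(run_master([1, 2, 3, 4, 5], lambda x: x * x, n_workers=3))
```

Because the workers only pull from a queue, adding or removing workers does not change the application, which is what makes this kind of workload a natural fit for elastic resources. Threads suit the I/O-bound case described above; a CPU-bound workload would want processes or separate machines instead.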

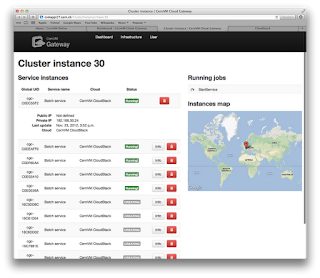

What you do need is a well-optimized hypervisor, potentially operated without multi-tenancy for dedicated access to network cards or GPUs, or a quick way to re-image part of a larger cluster with bare-metal provisioning. You also need a data center orchestrator that can scale to tens of thousands of hosts and manage part of the data centers in a hybrid fashion. All of these features are present in CloudStack, which leads me to believe that it's only a matter of time before we see our first CloudStack-based virtual cluster in the Top500 list. This would be an exciting time for Apache CloudStack.

If you are already using CloudStack for HPC use cases, I would love to hear about it.