CERNVM inception: CERNVM started in 2007 and entered development in 2008. It has been four years in the making, and the project is now wrapping up. The main concept was to create a virtual machine appliance that scientists could use on their desktop. The appliance would carry the latest software needed to analyze the data coming out of the LHC. Building appliances for the LHC has now become routine, and CERNVM comes in various flavors: VirtualBox, VMware, Xen, KVM and Hyper-V. What strikes you when you download CERNVM is its small size (x100MB). This is made possible by the CERNVM file system, or CVMFS, an HTTP-based, read-only file system optimized to deliver software applications to the appliances. CVMFS is now used widely throughout the LHC community. The file system is really a side artifact of the project, but a very valuable one. I heard that a micro CERNVM is under development: it would be ~6MB in size, and all the software it needs would be streamed via CVMFS.

Contextualization: With a very mature procedure for building VM appliances and a high-performance file system to distribute software just in time, what CERNVM lacked was a way to provision hundreds or thousands of instances on large clusters. Around 2009/2010, the CERNVM team developed a batch edition that could be used for batch processing on the clusters that analyze LHC data. This appliance was tested successfully on LXCLOUD. The biggest challenge for the appliances was configuration, or what this community calls contextualization (a term often attributed to the Nimbus project). Basically, it amounts to configuring the instance based on what it is supposed to do. The team developed a very advanced contextualization system. For business data center people, this is, for example, a way to tell an instance which Puppet profile it should use, where the Puppet master is, and which other instances it should be aware of. In the case of EC2, the way to contextualize an image is to pass variables through the EC2_USER_DATA entry. With OpenNebula this is also done using scripts placed in an ISO attached to the instance.
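To make the idea concrete, here is a minimal sketch of what an instance might do with the variables it receives at boot. It is not CERNVM's actual contextualization code: the KEY=VALUE format, the key names (ROLE, PUPPET_MASTER, PEERS) and the `Context` class are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Settings an instance derives from its user data (field names are hypothetical)."""
    role: str = "worker"
    puppet_master: str = ""
    peers: list = field(default_factory=list)


def parse_user_data(text: str) -> Context:
    """Parse KEY=VALUE lines; blank lines and '#' comments are skipped."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"malformed line: {raw!r}")
        values[key.strip().upper()] = value.strip()
    # PEERS is assumed to be a comma-separated list of addresses.
    peers = [p.strip() for p in values.get("PEERS", "").split(",") if p.strip()]
    return Context(
        role=values.get("ROLE", "worker"),
        puppet_master=values.get("PUPPET_MASTER", ""),
        peers=peers,
    )


if __name__ == "__main__":
    sample = """
    # hypothetical user data passed at instance launch
    ROLE=condor-worker
    PUPPET_MASTER=puppet.example.org
    PEERS=10.0.0.2, 10.0.0.3
    """
    print(parse_user_data(sample))
```

The same parsed structure could be fed from an EC2-style user-data string or from a file on an attached ISO; only the way the text reaches the instance differs.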

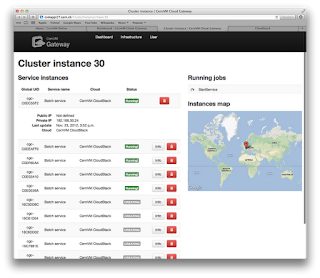

CERNVM Cloud: I had not talked to Predrag about the latest developments in a while, and I was impressed by how far they had gone. They fully automated the contextualization process, creating a web frontend, CERNVM On-line, where users fill in the contextualization parameters and create profiles for instances, including service offerings that tie to CloudStack service offerings. The kicker is that they tied it to a Gateway, the CERNVM Gateway (they got their branding right!), that lets users start instances and define clusters of instances using specific profiles. While enterprise people think of VM profiles as database or webserver profiles, here the profiles tend to be Condor clusters for batch processing, MPI clusters for parallel computing, and PROOF clusters for LHC analysis. The combination makes up their Cloud. What I really like is that they moved up the stack, from building VM images to providing an end-to-end service for users: a one-click, one-stop shop to write definitions of infrastructure and instantiate them. I think of it as a marketplace and a cloud management system all at once.

Internals: What technologists will love is how they combined an IaaS with their Gateway. They built on XMPP, formerly a chat protocol and now a more general messaging protocol. Predrag and I had talked about XMPP some time back, and a former student had developed an entire framework (Kestrel) for batch processing in virtual machines. Among the many interesting aspects of XMPP are its scalability, the ability to federate servers, and the ability to communicate with agents that are behind NAT. Of course we can argue about XMPP vs AMQP, but that would have to be another post. What CERNVM ended up doing is creating a complete XMPP-based framework to do Cloud Federation and communicate with Cloud IaaS APIs. An XMPP agent sits within the premises of a Cloud Provider and is responsible for starting the instances using the Cloud platform API. The instances then contextualize themselves using a pairing mechanism that ties them back to the CERNVM Cloud. Their Cloud can be made of public clouds, private clouds and even desktops. Brilliant!
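The flow described above (gateway asks an agent to start an instance, the instance pairs back to the cloud) can be sketched in a few lines. This is only an in-process illustration under my own assumptions: it uses plain Python objects instead of a real XMPP library, and the `Gateway` and `Agent` classes, the message fields and the one-time token scheme are hypothetical, not the CERNVM team's protocol.

```python
import secrets


class Gateway:
    """Hypothetical stand-in for the gateway: issues and redeems pairing tokens."""

    def __init__(self):
        self._pending = {}  # pairing token -> profile name

    def request_instance(self, profile: str) -> dict:
        token = secrets.token_hex(8)
        self._pending[token] = profile
        # In a real deployment this dict would travel as a message to the agent.
        return {"action": "start", "pairing_token": token}

    def pair(self, token: str) -> str:
        # One-time use: pop removes the token so it cannot be replayed.
        profile = self._pending.pop(token, None)
        if profile is None:
            raise KeyError("unknown or already used pairing token")
        return profile


class Agent:
    """Hypothetical agent inside a provider; wraps that provider's IaaS API."""

    def __init__(self, start_vm):
        self._start_vm = start_vm  # callable(user_data: str) -> instance id

    def handle(self, message: dict) -> str:
        if message.get("action") != "start":
            raise ValueError("unsupported action")
        # The token is handed to the new instance as its user data.
        return self._start_vm(message["pairing_token"])


if __name__ == "__main__":
    started = {}  # instance id -> user data, standing in for a real cloud

    def fake_start_vm(user_data: str) -> str:
        instance_id = f"vm-{len(started) + 1}"
        started[instance_id] = user_data
        return instance_id

    gateway = Gateway()
    agent = Agent(fake_start_vm)
    vm = agent.handle(gateway.request_instance("condor-worker"))
    # On boot, the instance presents its token and learns which profile to apply.
    print(vm, "paired with profile", gateway.pair(started[vm]))
```

The point of the design is that the gateway never needs to reach into the provider's network: the agent makes the API call locally, and the instance calls back out to claim its profile, which fits the NAT-friendliness mentioned above.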

And CloudStack in all of this?

What of course made my day is that the Cloud platform they used to test their end-to-end system was Apache CloudStack 4.0. In the two screenshots, you can see that they defined a CERNVM zone within CloudStack. Being a test system, the scale is still small: 48 cores, running a mix of CentOS 6.3 and Scientific Linux (CERN version) 6.3. They do have plans to scale up, and considering their expertise and CloudStack's proven scalability this should not be a problem. Their setup is a basic Zone with a straightforward NFS storage backend. What Ioannis Charalampidis (one of the developers I met) told me is that CloudStack was easy to install, almost a drop-in operation. He made it sound like a non-event. Ioannis did not mention any bugs or installation issues; instead he asked for better EC2 support and the ability to define IP/MAC pairs for guest networks, a request I knew about from my LXCLOUD days. This is mostly a security feature to provide network traceability. I pointed him to the Apache CloudStack JIRA instance and showed him how to submit a feature request. I look forward to seeing his feature requests in the coming days.

Final thoughts: I came back from meeting with the CERNVM team thinking it was worth skipping the turkey. It gave me a few ideas and showed me again the power of CloudStack and Open Source Software:

- Installing a Private or Public Cloud should be a non-event. Ioannis's feedback really showed me that this is the case with CloudStack and that the hard work put forth by the community has paid off. From the source build with Maven to the hosting of convenience binaries, installing CloudStack is a straightforward process (at least in a basic Zone configuration).

- An ecosystem is developing around CloudStack. We already knew this from the various partners that participate in and contribute to the Apache community, but we are also starting to see end-to-end scenarios like the CERNVM Cloud. I encouraged them to open source their XMPP framework and enhance it to generalize the profile definition. They could easily make it a marketplace for Puppet or Chef recipes and use non-CERNVM images that would pair with these recipes for automatic configuration. That would be even more exciting.

- One other aspect that struck me is that they developed a very enterprise-looking system. Leveraging the Bootstrap front-end framework and Django, their UI is extremely intuitive, interactive and pleasing, something rather rare in R&D projects focused on batch processing.

- Finally, I proposed to identify CloudStack-based providers that would be willing to install their Gateway agent and share their resources with them. Participating in a Cloud Federation to find the God particle is a worthwhile endeavor!
